Greetings lovely community,

I'm not sure exactly how to classify this issue (mods, feel free to change the title if a better one fits). A couple of days ago I noticed that some extra messages have started showing up on screen when I reboot or shut down, and I'm not sure whether they need further investigation on my part or can be safely ignored. Normally my laptop displays two quick messages before a reboot/shutdown. I know these are normal and not cause for concern:

    watchdog: watchdog0: watchdog did not stop!
    watchdog: watchdog0: watchdog did not stop!

Those were typically the only messages I'd see after clicking reboot or shutdown in my GNOME desktop. Now, whether because of a recent systemd or kernel update or something I've done recently, I get a few extra messages on every reboot/shutdown. They look the same on the linux, linux-lts, and linux-zen kernels, which makes me think the problem isn't kernel-specific but more likely related to systemd or to something I've changed. These are the messages I now see on every reboot/shutdown:

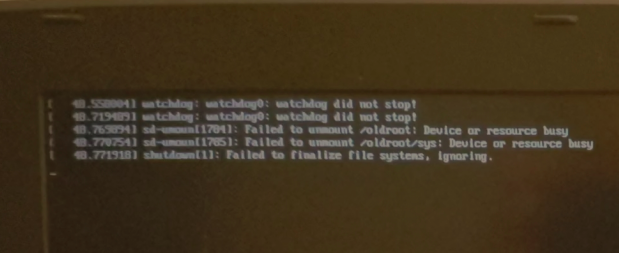

    [ 40.550004] watchdog: watchdog0: watchdog did not stop!
    [ 40.719409] watchdog: watchdog0: watchdog did not stop!
    [ 40.769894] sd-umoun[1704]: Failed to unmount /oldroot: Device or resource busy
    [ 40.770754] sd-umoun[1785]: Failed to unmount /oldroot/sys: Device or resource busy
    [ 40.771918] shutdown[1]: Failed to finalize file systems, ignoring.

As far as I can tell, I haven't changed anything on my system other than running yay updates every couple of days, using Pika Backup to back up my files, and using Timeshift to back up my system files. For Pika Backup I use a 2 TB Seagate SSD, which I've used for over a year without a single issue. I only started using Timeshift after installing EndeavourOS, and for that I use a small 64 GB SanDisk thumb drive. I run both backups once a day at night, and once they finish (usually 1-5 minutes) I remove the drives from my laptop. I mention this because I wonder whether my system thinks one of these devices wasn't removed and is still busy, but beyond that I'm not sure, so I'm turning to this community for guidance!
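
To test the theory that one of the backup drives is still mounted when it gets unplugged, a check like the one below could be run just before removing the drives. It's only a minimal sketch, not a diagnosis. It assumes GNOME (through udisks2) mounts removable drives under /run/media/$USER, which is the usual location on Arch-based systems. The function name `list_mounts_under` is made up for illustration, and I don't know for certain where Timeshift mounts its target, so that prefix would have to be passed in by hand.

```shell
#!/bin/sh
# Minimal sketch: list mounts whose mount point starts with a given prefix,
# e.g. to confirm a backup drive is no longer mounted before unplugging it.
# Assumption: GNOME/udisks2 mounts removable drives under /run/media/$USER
# on Arch-based systems, so /run/media is the default prefix.
list_mounts_under() {
    prefix="${1:-/run/media}"
    # /proc/mounts columns: device, mount point, fs type, options, dump, pass.
    # Print device, mount point and fs type for every mount whose mount point
    # begins with $prefix (plain string-prefix match, so /run/media would
    # also match a hypothetical /run/media2).
    awk -v p="$prefix" 'index($2, p) == 1 { print $1, $2, $3 }' /proc/mounts
}

target="${1:-/run/media}"
if list_mounts_under "$target" | grep -q .; then
    echo "Still mounted under $target:"
    list_mounts_under "$target"
else
    echo "Nothing mounted under $target"
fi
```

For example, saved under a hypothetical name like `check-mounts.sh`, running `sh check-mounts.sh /run/media` right before unplugging would show whether either drive is still listed. If one is, unmounting it first (the eject button in Files, or `udisksctl unmount -b /dev/sdX1` with the real device in place of the placeholder `/dev/sdX1`) would rule this theory in or out.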

Please feel free to ask for any additional logs or commands to run, and I'll post the output. The screenshot above shows the shutdown messages I'm referring to. Thank you for your time and for reading this post. Any and all help is much appreciated.

Edit: Here is my inxi output:

    [scott@endeavourOS ~]$ inxi -Fxxxza --no-host
    System:
      Kernel: 5.14.12-arch1-1 x86_64 bits: 64 compiler: gcc v: 11.1.0
        parameters: BOOT_IMAGE=/boot/vmlinuz-linux
        root=UUID=2c6a8f39-939c-47a4-9d95-dabf69e6f5c6 rw nvidia-drm.modeset=1
        quiet loglevel=3 nowatchdog
      Desktop: GNOME 40.5 tk: GTK 3.24.30 wm: gnome-shell dm: GDM 40.0
        Distro: EndeavourOS base: Arch Linux
    Machine:
      Type: Laptop System: Acer product: Aspire E5-576G v: V1.32 serial: <filter>
      Mobo: KBL model: Ironman_SK v: V1.32 serial: <filter> UEFI: Insyde v: 1.32
        date: 10/24/2017
    Battery:
      ID-1: BAT1 charge: 16.4 Wh (100.0%) condition: 16.4/62.2 Wh (26.4%)
        volts: 12.6 min: 11.1 model: PANASONIC AS16B5J type: Li-ion
        serial: <filter> status: Full
    CPU:
      Info: Quad Core model: Intel Core i5-8250U bits: 64 type: MT MCP
        arch: Kaby Lake note: check family: 6 model-id: 8E (142) stepping: A (10)
        microcode: EA cache: L2: 6 MiB
        flags: avx avx2 lm nx pae sse sse2 sse3 sse4_1 sse4_2 ssse3 vmx
        bogomips: 28808
      Speed: 800 MHz min/max: 400/3400 MHz Core speeds (MHz): 1: 800 2: 2068
        3: 952 4: 1551 5: 931 6: 800 7: 1068 8: 2074
      Vulnerabilities: Type: itlb_multihit status: KVM: VMX disabled
        Type: l1tf
        mitigation: PTE Inversion; VMX: conditional cache flushes, SMT vulnerable
        Type: mds mitigation: Clear CPU buffers; SMT vulnerable
        Type: meltdown mitigation: PTI
        Type: spec_store_bypass
        mitigation: Speculative Store Bypass disabled via prctl and seccomp
        Type: spectre_v1
        mitigation: usercopy/swapgs barriers and __user pointer sanitization
        Type: spectre_v2 mitigation: Full generic retpoline, IBPB: conditional,
        IBRS_FW, STIBP: conditional, RSB filling
        Type: srbds mitigation: Microcode
        Type: tsx_async_abort status: Not affected
    Graphics:
      Device-1: Intel UHD Graphics 620 vendor: Acer Incorporated ALI driver: i915
        v: kernel bus-ID: 00:02.0 chip-ID: 8086:5917 class-ID: 0300
      Device-2: NVIDIA GP108M [GeForce MX150] vendor: Acer Incorporated ALI
        driver: nvidia v: 470.74 alternate: nouveau,nvidia_drm bus-ID: 01:00.0
        chip-ID: 10de:1d10 class-ID: 0302
      Device-3: Chicony HD WebCam type: USB driver: uvcvideo bus-ID: 1-7:5
        chip-ID: 04f2:b571 class-ID: 0e02
      Display: x11 server: X.org 1.20.13 compositor: gnome-shell driver:
        loaded: modesetting,nvidia resolution: <missing: xdpyinfo>
      OpenGL: renderer: NVIDIA GeForce MX150/PCIe/SSE2 v: 4.6.0 NVIDIA 470.74
        direct render: Yes
    Audio:
      Device-1: Intel Sunrise Point-LP HD Audio vendor: Acer Incorporated ALI
        driver: snd_hda_intel v: kernel alternate: snd_soc_skl bus-ID: 00:1f.3
        chip-ID: 8086:9d71 class-ID: 0403
      Sound Server-1: ALSA v: k5.14.12-arch1-1 running: yes
      Sound Server-2: JACK v: 1.9.19 running: no
      Sound Server-3: PulseAudio v: 15.0 running: yes
      Sound Server-4: PipeWire v: 0.3.38 running: yes
    Network:
      Device-1: Intel Dual Band Wireless-AC 3168NGW [Stone Peak] driver: iwlwifi
        v: kernel port: 4000 bus-ID: 03:00.0 chip-ID: 8086:24fb class-ID: 0280
      IF: wlan0 state: up mac: <filter>
      Device-2: Realtek RTL8111/8168/8411 PCI Express Gigabit Ethernet
        vendor: Acer Incorporated ALI driver: r8168 v: 8.049.02-NAPI modules: r8169
        port: 3000 bus-ID: 04:00.1 chip-ID: 10ec:8168 class-ID: 0200
      IF: enp4s0f1 state: down mac: <filter>
    Bluetooth:
      Device-1: Intel Wireless-AC 3168 Bluetooth type: USB driver: btusb v: 0.8
        bus-ID: 1-5:4 chip-ID: 8087:0aa7 class-ID: e001
      Report: rfkill ID: hci0 rfk-id: 0 state: up address: see --recommends
    Drives:
      Local Storage: total: 238.47 GiB used: 158.15 GiB (66.3%)
      SMART Message: Unable to run smartctl. Root privileges required.
      ID-1: /dev/sda maj-min: 8:0 vendor: SK Hynix model: HFS256G39TND-N210A
        size: 238.47 GiB block-size: physical: 4096 B logical: 512 B
        speed: 6.0 Gb/s type: SSD serial: <filter> rev: 1P10 scheme: GPT
    Partition:
      ID-1: / raw-size: 237.97 GiB size: 233.17 GiB (97.99%)
        used: 126.5 GiB (54.3%) fs: ext4 dev: /dev/sda2 maj-min: 8:2
      ID-2: /boot/efi raw-size: 512 MiB size: 511 MiB (99.80%)
        used: 296 KiB (0.1%) fs: vfat dev: /dev/sda1 maj-min: 8:1
    Swap:
      Kernel: swappiness: 60 (default) cache-pressure: 100 (default)
      ID-1: swap-1 type: file size: 512 MiB used: 0 KiB (0.0%) priority: -2
        file: /swapfile
    Sensors:
      System Temperatures: cpu: 54.0 C mobo: N/A gpu: nvidia temp: 45 C
      Fan Speeds (RPM): N/A
    Info:
      Processes: 275 Uptime: 45m wakeups: 1 Memory: 15.51 GiB
        used: 2.53 GiB (16.3%) Init: systemd v: 249 tool: systemctl Compilers:
        gcc: 11.1.0 Packages: pacman: 1279 lib: 294 flatpak: 0 Shell: Bash v: 5.1.8
        running-in: tilix inxi: 3.3.06

Edit 2: Here is the output of lsblk -fm and my /etc/fstab, in case that helps:

    [scott@endeavourOS ~]$ lsblk -fm
    NAME   FSTYPE FSVER LABEL    UUID                                 FSAVAIL FSUSE% MOUNTPOINTS   SIZE OWNER GROUP MODE
    sda                                                                                          238.5G root  disk  brw-rw----
    ├─sda1 vfat   FAT32 NO_LABEL BB5D-4A9F                             510.7M     0% /boot/efi     512M root  disk  brw-rw----
    └─sda2 ext4   1.0            2c6a8f39-939c-47a4-9d95-dabf69e6f5c6   94.8G    54% /             238G root  disk  brw-rw----
    sr0                                                                                           1024M root  optic brw-rw----


    # /etc/fstab: static file system information.
    #
    # Use 'blkid' to print the universally unique identifier for a device; this may
    # be used with UUID= as a more robust way to name devices that works even if
    # disks are added and removed. See fstab(5).
    #
    # <file system> <mount point> <type> <options> <dump> <pass>
    UUID=BB5D-4A9F /boot/efi vfat umask=0077 0 2
    UUID=2c6a8f39-939c-47a4-9d95-dabf69e6f5c6 / ext4 defaults,noatime 0 1
    /swapfile swap swap defaults,noatime 0 0
    tmpfs /tmp tmpfs defaults,noatime,mode=1777 0 0