DISCLAIMER: I have spent limited time with bcachefs, so it is possible I am misunderstanding some of it.

DISCLAIMER 2: When I describe how things work, I am referring to how they present in user space and am not implying anything about the underlying implementation.

DISCLAIMER 3: The focus of my investigation was subvolume and snapshot handling because that is important to my use case. However, there are lots of other important things about a filesystem in addition to this one topic.

I recently took a look at bcachefs because I was hoping it might be an improvement over btrfs in subvolume and snapshot handling. Ultimately, I wanted to see if it could be a replacement for zfs. If you want the short version, it isn’t. If you have time and interest for the longer version, feel free to keep reading.

First, I need to explain my personal challenges with btrfs snapshots to provide some perspective.

Btrfs snapshots store minimal metadata and are only loosely hierarchical. This presents several problems in practical use.

- In many situations it is impossible to tell which subvolume the snapshot came from

- There is no practical difference between a snapshot and a subvolume; snapshots basically are subvolumes

- Snapshots can only be created at a location within the mounted filesystem

- You can only take snapshots of mounted subvolumes

From a management perspective this creates challenges. For example:

- It is impossible to create generic tooling to restore snapshots (because there is no way to know where they should be restored to)

- To take snapshots you may need to dynamically mount parts of the filesystem the user doesn’t want mounted

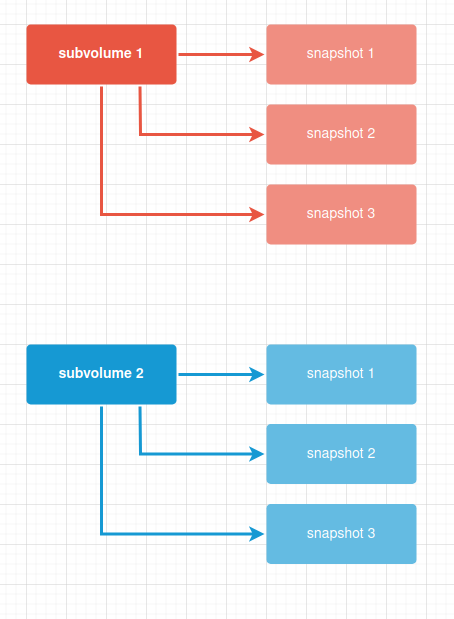

Just to get an idea of what I am referring to, consider a situation where there are two subvolumes, each with three snapshots

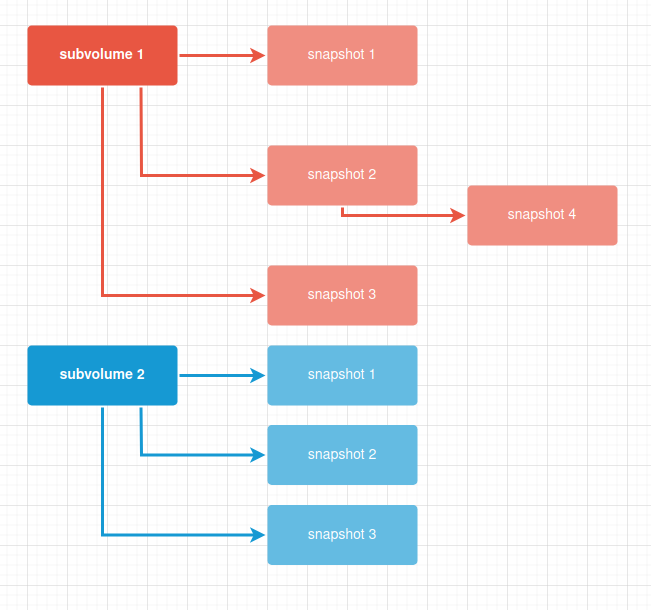

Now, let’s say we restore snapshot 2 of subvolume 1 and then take a new snapshot. You might be expecting the result would be something like this

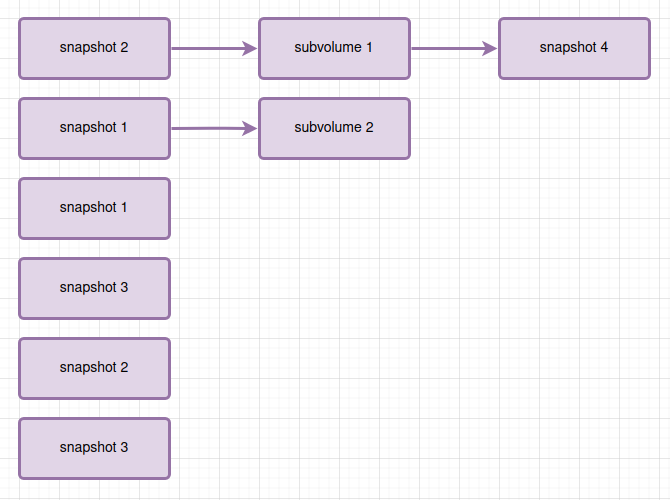

Unfortunately, the end result in btrfs is this

Why is that? Because btrfs subvolumes only track their immediate parent. Once you remove the parent the children all become orphaned.

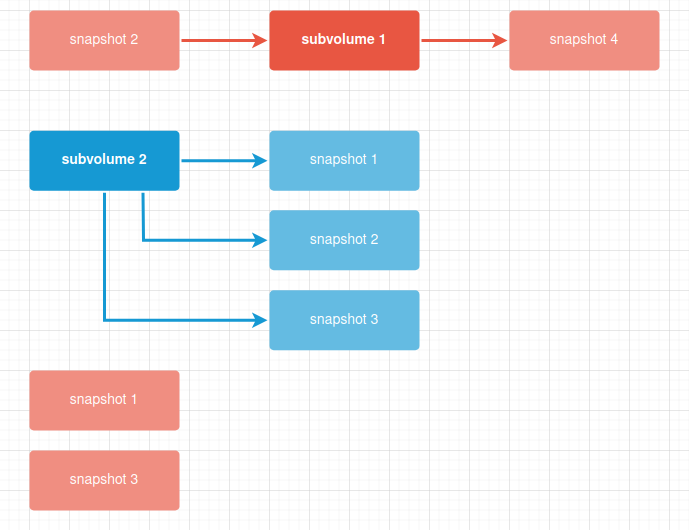
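To make the orphaning concrete, here is a toy model in Python. It is only a sketch of the behavior described above, not of how btrfs is implemented: the `Subvolume` and `ToyFilesystem` classes, the `origin_of` helper, and the "restore" procedure (delete the live subvolume, then snapshot the chosen snapshot in its place) are all assumptions made for illustration.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Subvolume:
    name: str
    parent_uuid: Optional[str] = None  # only the immediate parent is recorded
    uuid: str = field(default_factory=lambda: uuid.uuid4().hex)


class ToyFilesystem:
    """Toy model: subvolumes indexed by UUID, each knowing only its parent."""

    def __init__(self) -> None:
        self.subvolumes: Dict[str, Subvolume] = {}

    def create(self, name: str) -> Subvolume:
        sub = Subvolume(name)
        self.subvolumes[sub.uuid] = sub
        return sub

    def snapshot(self, source: Subvolume, name: str) -> Subvolume:
        # A snapshot is just another subvolume that remembers its source's UUID.
        snap = Subvolume(name, parent_uuid=source.uuid)
        self.subvolumes[snap.uuid] = snap
        return snap

    def delete(self, sub: Subvolume) -> None:
        del self.subvolumes[sub.uuid]

    def origin_of(self, sub: Subvolume) -> Optional[str]:
        """Return the parent's name if the parent still exists, else None."""
        parent = self.subvolumes.get(sub.parent_uuid) if sub.parent_uuid else None
        return parent.name if parent else None


fs = ToyFilesystem()
sub1 = fs.create("subvolume1")
snaps = [fs.snapshot(sub1, f"snapshot{i}") for i in (1, 2, 3)]

# "Restore" snapshot 2: remove the live subvolume, replace it with a
# snapshot of snapshot 2, then take a new snapshot of the result.
fs.delete(sub1)
restored = fs.snapshot(snaps[1], "subvolume1")
new_snap = fs.snapshot(restored, "snapshot4")

for s in snaps + [new_snap]:
    print(s.name, "->", fs.origin_of(s))
```

In this model the three original snapshots can no longer be traced to anything once the old subvolume is gone, even though a subvolume with the same name exists again. Only the newest snapshot still resolves to a parent.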

If we restore a snapshot in subvolume 2 we end up with this

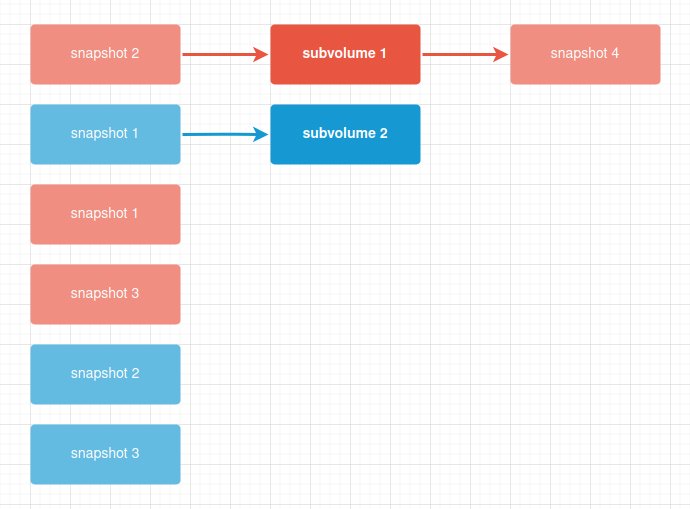

It is still pretty clear what happened due to the color coding. However, that was just there to make the diagram easier to understand. In reality, that differentiation isn’t there, leaving us with this

What are those snapshots of? No idea.

So what happens in practice? Each snapshot management solution for btrfs creates a different method for “tracking” snapshots. For example, Snapper creates a .snapshots subvolume and keeps all the snapshots for a subvolume inside it. It also adds a file with additional metadata along with each snapshot. Of course, every other solution does something completely different which only increases the divergence.
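As a rough idea of what this kind of out-of-band tracking looks like, here is a minimal Python sketch that writes a sidecar metadata file next to each snapshot. The `info.json` file name, its fields, and the `record_snapshot` and `source_of` helpers are all made up for this example and are not Snapper's actual format. An ordinary directory stands in for the snapshot itself.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def record_snapshot(snapshot_dir: Path, source: str, description: str) -> Path:
    """Write a sidecar file next to a snapshot recording where it came from."""
    snapshot_dir.mkdir(parents=True, exist_ok=True)
    meta = {
        "source": source,  # the path the snapshot was taken of
        "description": description,
        "created": datetime.now(timezone.utc).isoformat(),
    }
    path = snapshot_dir / "info.json"
    path.write_text(json.dumps(meta, indent=2))
    return path


def source_of(snapshot_dir: Path) -> str:
    """Read the sidecar file back to find out what the snapshot is of."""
    return json.loads((snapshot_dir / "info.json").read_text())["source"]


with tempfile.TemporaryDirectory() as tmp:
    # Hypothetical layout: one numbered directory per snapshot.
    snap_dir = Path(tmp) / ".snapshots" / "1"
    record_snapshot(snap_dir, source="/home", description="before upgrade")
    recovered = source_of(snap_dir)
    print(recovered)
```

The catch is the one described above: the filesystem knows nothing about this file, so any tool that does not understand this particular convention cannot tell where the snapshot belongs.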

NOTE: In these diagrams I am assuming that we are using snapshots to do the restores but even if you use other methods, the diagrams end up just as messy. They are just messy in different ways.

I was hoping that bcachefs would put a modern spin on this and increase usability. Here is what I found.

First, let’s talk about subvolumes in bcachefs. Subvolumes present as directories in bcachefs which means they share all the disadvantages of btrfs subvolumes but also have some new ones. Subvolumes can’t be mounted and there is no user space tool to list subvolumes. That means:

- Subvolumes are basically indistinguishable from directories. I hope you took good notes when you were creating them.

- You can’t use flat subvolume layouts. The closest you can get is to mount the root somewhere and then use bind mounts. That will require the root to always be mounted somewhere though.
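The bind mount workaround can be sketched as follows. This Python snippet only builds the `mount --bind` command lines and does not run them. The `/mnt/pool` mount point, the subvolume names, and the `bind_mount_commands` helper are assumptions for illustration.

```python
import shlex
from typing import Dict, List


def bind_mount_commands(root_mount: str, layout: Dict[str, str]) -> List[str]:
    """Build one `mount --bind` command per subvolume directory.

    root_mount is where the filesystem root is mounted; layout maps a
    subvolume path relative to that root to the place it should appear.
    """
    cmds = []
    for subvol, target in layout.items():
        source = f"{root_mount.rstrip('/')}/{subvol.lstrip('/')}"
        cmds.append(shlex.join(["mount", "--bind", source, target]))
    return cmds


# Hypothetical layout: the root lives at /mnt/pool and must stay mounted.
commands = bind_mount_commands("/mnt/pool", {"home": "/home", "srv": "/srv"})
for cmd in commands:
    print(cmd)
```

Every bind mount has `/mnt/pool` as its source, which is why the root has to remain mounted for the layout to keep working.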

So what about snapshots? Well, since bcachefs snapshots are presented through subvolumes they effectively have all the same limitations as btrfs snapshots but they get some new ones added in.

- You can’t get a list of snapshots

- There is no way to see how much space each snapshot is uniquely consuming

- There is no way to see what even the immediate parent is

- There is no way to directly boot off a snapshot since they can’t be mounted

Essentially, it is just an unmanaged mess. I have always thought that whoever designed btrfs snapshots didn’t really consider all the use cases for them. But bcachefs feels more like they focused on the efficiency of the implementation and completely ignored usability.

To be fair, some of the issues will probably be fixed as the tools mature. For example, I expect that there will eventually be ways to list subvolumes and snapshots. However, some of these issues seem more foundational. Not allowing subvolumes to be mounted directly and not storing good, usable metadata with snapshots seem like problems not likely to be solved unless the developers start thinking of things differently.

My hope that bcachefs could be a viable replacement for zfs is, unfortunately, not likely to be realized.

Of course, I would love to be wrong about this, so if I have made any mistakes, please let me know.