Small introduction

Howdy folks. I'm someone who tries out Linux every so often to see whether I could switch to it at some point. I've now reached the point where I'm trying EndeavourOS, as I heard it has a friendly and helpful community and is Arch-based (so far my attempts have shown that I get the best experience on Arch-based distros). To my surprise, it seems to be the first distro I've tried that can handle my annoying setup.

A brief introduction to my setup

An i7-11800H laptop with integrated Intel graphics + a dedicated Nvidia RTX 3070 Mobile (damn Nvidia Optimus).

There is no BIOS option to fully disable the integrated card. If there were, I would use it, since I always set everything to use the Nvidia card anyway.

When I'm at home, I also attach a Thunderbolt eGPU with an Nvidia RTX 3080 to the system. Since my daily monitor is a 3440 x 1440 ultrawide and I also do some gaming, the eGPU is needed to run things decently on this setup. The eGPU has been the part that's a pain in the neck to get working properly (in Manjaro, for example, which was my last attempt, it seemed to work, but not at its full performance; if you want to read about that, here).

I'm using Xorg because on Wayland there is no way (that I know of, at least) to configure the system to use only the eGPU.

Current attempt at the issue

On Endeavour, though, the first tests at least indicate that the eGPU is working, or at least working better. However, I've stumbled upon a strange bug with one of the games I play a lot and have used for benchmarking so far: SnowRunner (run through Proton).

When no eGPU is connected, the game runs just fine, but with the eGPU connected, the game opens to a black screen and immediately closes.

Proton log for the game:

I also tried launching the game with `PROTON_USE_WINED3D=1 %command%`. With that method it reached the main menu, but once I start loading the game itself, nothing happens; it just sits on a black screen.

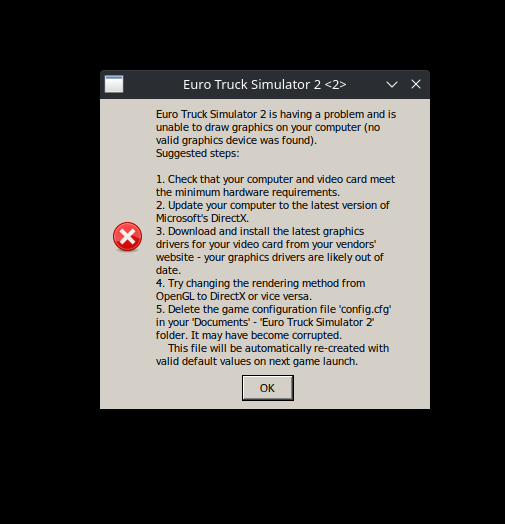
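For anyone trying to reproduce this: Proton writes its log to `$HOME/steam-<appid>.log` when the game is launched with the Steam launch option `PROTON_LOG=1 %command%`. These logs are long, so a helper like the one below can narrow one down to the lines tagged `err:`, the prefix Wine and DXVK use for errors. It is a hypothetical helper, and the function names are my own, not part of Proton. It is only a filter; the full log is still what belongs in a bug report.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Proton) for reading Proton logs.
# Assumes the game was started with the Steam launch option
#   PROTON_LOG=1 %command%
# which makes Proton write $HOME/steam-<appid>.log.

# Print the path of the most recently modified steam-*.log in a directory
# (defaults to $HOME). Prints nothing if no log exists.
newest_proton_log() {
    local dir="${1:-$HOME}"
    # ls -t sorts newest first; errors are silenced so "no match" is just empty.
    ls -t "$dir"/steam-*.log 2>/dev/null | head -n 1
}

# Print (with line numbers) only the lines Wine or DXVK tagged as errors.
proton_log_errors() {
    local log
    log="$(newest_proton_log "${1:-$HOME}")"
    if [[ -z "$log" ]]; then
        echo "no steam-*.log found" >&2
        return 1
    fi
    grep -n 'err:' "$log"
}

# Usage after sourcing this file:
#   proton_log_errors            # newest log in $HOME
#   proton_log_errors /some/dir  # newest log in another directory
```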

I also tested Euro Truck Simulator 2 through Proton. When I launch the DirectX version of the game, I'm greeted with this error:

When I use Proton with the OpenGL launch option, the game works. If I don't use Proton at all (the native Linux version), the game also works. So it seems to me the issue is related to DirectX (so essentially DXVK?).
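One way to test the DXVK theory is to check which Vulkan devices exist. With the eGPU attached, Vulkan sees two Nvidia GPUs, and DXVK might be picking a different one from the card driving the display. That is an assumption, not a confirmed cause. `vulkaninfo --summary` (from the `vulkan-tools` package) lists the devices, and the sketch below just pulls out the `deviceName` lines. The DXVK README also documents a `DXVK_FILTER_DEVICE_NAME` environment variable (a substring match on the Vulkan device name). If the DXVK bundled with your Proton version supports it, a launch option such as `DXVK_FILTER_DEVICE_NAME="RTX 3080" %command%` may be worth a try.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract Vulkan device names from the output of
#   vulkaninfo --summary
# read on stdin. Summary lines are assumed to look like:
#   deviceName         = NVIDIA GeForce RTX 3080
vk_device_names() {
    # Strip everything up to and including "deviceName =" and print the rest;
    # -n plus the p flag prints only lines where the substitution happened.
    sed -n 's/^[[:space:]]*deviceName[[:space:]]*=[[:space:]]*//p'
}

# Usage:
#   vulkaninfo --summary | vk_device_names
```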

How my eGPU is configured

- Installed:
  - optimus-manager
  - optimus-manager-qt
  - plasma-thunderbolt

- Configured optimus-manager to always use the Nvidia GPU. This generates `/etc/X11/xorg.conf.d/10-optimus-manager.conf`:

```
Section "Files"
    ModulePath "/usr/lib/nvidia"
    ModulePath "/usr/lib32/nvidia"
    ModulePath "/usr/lib32/nvidia/xorg/modules"
    ModulePath "/usr/lib32/xorg/modules"
    ModulePath "/usr/lib64/nvidia/xorg/modules"
    ModulePath "/usr/lib64/nvidia/xorg"
    ModulePath "/usr/lib64/xorg/modules"
EndSection

Section "ServerLayout"
    Identifier "layout"
    Screen 0 "nvidia"
    Inactive "integrated"
EndSection

Section "Device"
    Identifier "nvidia"
    Driver "nvidia"
    BusID "PCI:1:0:0"
    Option "Coolbits" "28"
    Option "TripleBuffer" "true"
EndSection

Section "Screen"
    Identifier "nvidia"
    Device "nvidia"
    Option "AllowEmptyInitialConfiguration"
    Option "AllowExternalGpus"
EndSection

Section "Device"
    Identifier "integrated"
    Driver "modesetting"
    BusID "PCI:0:2:0"
EndSection

Section "Screen"
    Identifier "integrated"
    Device "integrated"
EndSection
```

- Created my own script to detect the eGPU and copy the needed configuration files (I've built it over a year on various distros by piecing together multiple existing scripts). In `/etc/X11/xorg.conf.d` I've created the file `11-nvidia-egpu.conf`:

```
Section "ServerLayout"
    Identifier "egpu"
    Screen 0 "nvidiaegpu"
    Inactive "nvidia"
    Inactive "integrated"
EndSection

Section "Device"
    Identifier "nvidiaegpu"
    Driver "nvidia"
    BusID "PCI:5:0:0"
    Option "AllowEmptyInitialConfiguration"
    Option "AllowExternalGpus"
EndSection

Section "Screen"
    Identifier "nvidiaegpu"
    Device "nvidiaegpu"
    Option "metamodes" "nvidia-auto-select +0+0 {ForceCompositionPipeline=On}"
    Option "TripleBuffer" "on"
    Option "AllowIndirectGLXProtocol" "off"
EndSection
```

Basically, the script works like this: if the eGPU is detected, the following file, `01-egpu.conf`, is copied to the `xorg.conf.d` folder:

```
Section "ServerFlags"
    Option "DefaultServerLayout" "egpu"
EndSection
```
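For reference, here is a minimal sketch of the same idea. It is not the original script. The eGPU address `05:00.0` comes from the lspci output in this post. The template location `/etc/X11/egpu/01-egpu.conf` is a hypothetical path. Removing `01-egpu.conf` when the eGPU is absent, so that Xorg falls back to the optimus-manager layout, is my assumption about how the fallback should work. The sketch only changes files when run as root with `--apply`, and the change takes effect the next time Xorg starts.

```shell
#!/usr/bin/env bash
# Minimal sketch of an eGPU detection step (NOT the original script).
# Assumptions: the eGPU sits at PCI address 05:00.0 (from lspci), and the
# 01-egpu.conf template lives in the hypothetical directory /etc/X11/egpu.

EGPU_ADDR="${EGPU_ADDR:-05:00.0}"
TEMPLATE="${TEMPLATE:-/etc/X11/egpu/01-egpu.conf}"
CONF_DIR="${CONF_DIR:-/etc/X11/xorg.conf.d}"

# Succeeds if the lspci listing on stdin has an NVIDIA VGA controller
# at the eGPU address.
egpu_listed() {
    grep -q "^${EGPU_ADDR} VGA compatible controller: NVIDIA"
}

# Install the layout override when the eGPU is present; remove it otherwise
# so Xorg uses the default (optimus-manager) layout again.
apply_config() {
    if lspci | egpu_listed; then
        install -m 644 "$TEMPLATE" "$CONF_DIR/01-egpu.conf"
        echo "eGPU found: installed $CONF_DIR/01-egpu.conf"
    else
        rm -f "$CONF_DIR/01-egpu.conf"
        echo "no eGPU: removed $CONF_DIR/01-egpu.conf"
    fi
}

# Only touch files when explicitly asked (run as root before Xorg starts).
if [[ "${1:-}" == "--apply" ]]; then
    apply_config
fi
```

Example: `sudo bash egpu-detect.sh --apply` (the file name is arbitrary), then restart the display manager or reboot.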

More technical details

Some technical info to help debug

```
$ lspci -k | grep -EA3 'VGA|3D|Display'
00:02.0 VGA compatible controller: Intel Corporation TigerLake-H GT1 [UHD Graphics] (rev 01)
	DeviceName: Onboard - Video
	Subsystem: Gigabyte Technology Co., Ltd Device 78cc
	Kernel driver in use: i915
--
01:00.0 VGA compatible controller: NVIDIA Corporation GA104M [GeForce RTX 3070 Mobile / Max-Q] (rev a1)
	Subsystem: Gigabyte Technology Co., Ltd Device 78cc
	Kernel driver in use: nvidia
	Kernel modules: nouveau, nvidia_drm, nvidia
--
05:00.0 VGA compatible controller: NVIDIA Corporation GA102 [GeForce RTX 3080] (rev a1)
	Subsystem: Gigabyte Technology Co., Ltd Device 405d
	Kernel driver in use: nvidia
	Kernel modules: nouveau, nvidia_drm, nvidia
```
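A detail worth double-checking in the `BusID` lines: lspci prints addresses in hexadecimal (`05:00.0`), while Xorg's `BusID "PCI:bus:device:function"` expects decimal numbers. For buses 0, 1 and 5 the two happen to match. An eGPU that enumerates at `0a:00.0`, for example, would need `PCI:10:0:0`. Here is a small helper for the conversion (the name `lspci_to_busid` is made up for this example):

```shell
#!/usr/bin/env bash
# Convert an lspci address (hex "bus:device.function", e.g. 05:00.0,
# optionally with a domain prefix such as 0000:) into the decimal
# form Xorg expects in BusID (e.g. PCI:5:0:0).
lspci_to_busid() {
    local addr="$1" bus dev fn
    # Drop an optional PCI domain prefix ("0000:05:00.0" -> "05:00.0").
    if [[ "$addr" == *:*:* ]]; then
        addr="${addr#*:}"
    fi
    bus="${addr%%:*}"
    dev="${addr#*:}"
    dev="${dev%%.*}"
    fn="${addr##*.}"
    # printf reads the 0x-prefixed values as hex and prints them as decimal.
    printf 'PCI:%d:%d:%d\n' "0x$bus" "0x$dev" "0x$fn"
}

# Example: BusIDs of all NVIDIA (vendor 10de) display controllers.
#   lspci -d 10de: | awk '/VGA|3D/ {print $1}' | while read -r a; do
#       echo "$a -> $(lspci_to_busid "$a")"
#   done
```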

```
$ nvidia-smi
Fri Apr 15 13:32:12 2022
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 510.60.02    Driver Version: 510.60.02    CUDA Version: 11.6     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA GeForce ...  Off  | 00000000:01:00.0 Off |                  N/A |
| N/A   50C    P8    17W /  N/A |      6MiB /  8192MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   1  NVIDIA GeForce ...  Off  | 00000000:05:00.0  On |                  N/A |
|  0%   50C    P5    42W / 320W |   1769MiB / 10240MiB |     35%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A       813      G   /usr/lib/Xorg                       4MiB |
|    1   N/A  N/A       813      G   /usr/lib/Xorg                     831MiB |
|    1   N/A  N/A       947      G   /usr/bin/kwin_x11                 132MiB |
|    1   N/A  N/A      1022      G   /usr/bin/plasmashell               93MiB |
|    1   N/A  N/A      1562      G   ...e/Steam/ubuntu12_32/steam       45MiB |
|    1   N/A  N/A      1609      G   ...007348006062947779,131072      217MiB |
|    1   N/A  N/A      1700      G   ...ef_log.txt --shared-files      339MiB |
|    1   N/A  N/A      4298      G   /usr/lib/kf5/kioslave5              3MiB |
+-----------------------------------------------------------------------------+
```
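In the `Disp.A` column above, the RTX 3080 (bus 05) is the GPU with an active display. nvidia-smi's query mode gives the same check in a script-friendly form. The fields `index`, `name`, `pci.bus_id` and `display_active` are standard `--query-gpu` fields. `active_display_gpu` is a hypothetical helper that prints the bus ID of every GPU whose display is `Enabled`. Running it with and without the eGPU attached shows which card Xorg ended up on.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the PCI bus ID of each GPU with an active
# display, reading the output of
#   nvidia-smi --query-gpu=index,name,pci.bus_id,display_active --format=csv,noheader
# on stdin. Fields in that CSV output are separated by ", ".
active_display_gpu() {
    awk -F', ' '$4 == "Enabled" { print $3 }'
}

# Usage:
#   nvidia-smi --query-gpu=index,name,pci.bus_id,display_active \
#       --format=csv,noheader | active_display_gpu
```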

```
$ neofetch
user@linuxtest3
----------------------------
OS: EndeavourOS Linux x86_64
Host: AORUS 17G XD 993AD
Kernel: 5.17.3-arch1-1
Uptime: 37 mins
Packages: 1133 (pacman)
Shell: bash 5.1.16
Resolution: 2560x1440, 1920x1080, 3440x1440
DE: Plasma 5.24.4
WM: KWin
Theme: [Plasma], Breeze [GTK2/3]
Icons: [Plasma], breeze-dark [GTK2/3]
Terminal: konsole
CPU: 11th Gen Intel i7-11800H (16) @ 4.600GHz
GPU: NVIDIA GeForce RTX 3080
GPU: NVIDIA GeForce RTX 3070 Mobile / Max-Q
GPU: Intel TigerLake-H GT1 [UHD Graphics]
Memory: 4743MiB / 31838MiB
```

Ask for help

If anybody can help me find the issue, it would be much appreciated. While I'm not scared of Linux and the command line, I don't know a lot, so please keep that in mind (if you want me to test or output something, it would help if you included the command to use).

Before I go and report a bug to DXVK: maybe I've done something wrong on my end? Maybe I'm missing some essential library or something? The only Arch-based distro I've used so far is Manjaro, and I know it had the habit of doing a lot of stuff for you.