Greetings lovely community,

I’ve only ever known ext4, but I switched to btrfs earlier this year. I’ve seen different distros and users optimize btrfs in slightly different ways. When I installed with Calamares from the Apollo 22-1 ISO, I used the default btrfs settings with an 8GB swap partition (no hibernation) on my 256GB SSD. I’ve been wondering whether I should or need to add any optimizations for btrfs, or whether the defaults EndeavourOS used in the latest ISO are enough for the average user.

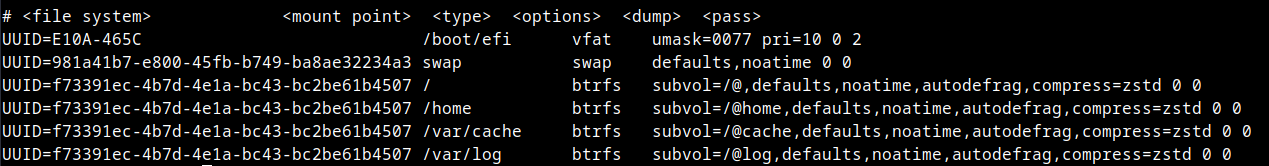

sudo nano /etc/fstab

    # /etc/fstab: static file system information.
    #
    # Use 'blkid' to print the universally unique identifier for a device; this may
    # be used with UUID= as a more robust way to name devices that works even if
    # disks are added and removed. See fstab(5).
    #
    # <file system> <mount point> <type> <options> <dump> <pass>
    UUID=EC97-73E1 /boot/efi vfat defaults,noatime 0 2
    UUID=112e02fc-dd9b-419a-a575-bc79354c86ab / btrfs subvol=/@,defaults,noatime,compress=zstd 0 0
    UUID=112e02fc-dd9b-419a-a575-bc79354c86ab /home btrfs subvol=/@home,defaults,noatime,compress=zstd 0 0
    UUID=112e02fc-dd9b-419a-a575-bc79354c86ab /var/cache btrfs subvol=/@cache,defaults,noatime,compress=zstd 0 0
    UUID=112e02fc-dd9b-419a-a575-bc79354c86ab /var/log btrfs subvol=/@log,defaults,noatime,compress=zstd 0 0
    UUID=b479a7af-fa4d-4bb8-98df-2ab4ae7a9b78 swap swap defaults 0 0
    tmpfs /tmp tmpfs defaults,noatime,mode=1777 0 0

The optimizations I know of are ssd, noatime, space_cache, commit=120, compress=zstd, discard=async, nodatacow, and autodefrag. As you can see from my fstab above, I’m already using defaults, noatime, and compress=zstd for the btrfs volumes. Calamares also created swap, vfat, and tmpfs entries, but I don’t know whether I should add optimizations to those as well or whether these options are meant for btrfs only.
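For comparison, the options actually in effect can differ from what fstab requests, because the kernel reports the full set it applied. Here is a minimal sketch of how they could be listed, assuming a Linux system that exposes `/proc/mounts`; the function name `btrfs_opts` is made up for illustration.

```shell
#!/bin/sh
# Print "mountpoint: options" for every btrfs entry in a mount table.
# Reads /proc/mounts by default; any file in the same format can be passed
# as the first argument (useful for trying it out on sample data).
btrfs_opts() {
    awk '$3 == "btrfs" { print $2 ": " $4 }' "${1:-/proc/mounts}"
}

# Show the effective options for the running system.
btrfs_opts
```

Each output line can then be compared by eye against the matching fstab line to see which options were added without being requested.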

I’ve got an SSD, so I’ve already enabled the SSD TRIM timer via systemctl enable fstrim.timer. I’m wondering whether I should also add the ssd option to my btrfs mounts or whether that would be overkill. I’m not sure, so feel free to clarify anything for me.
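To make the question concrete, this is roughly how the current state could be checked before changing anything. It is only a sketch under a few assumptions: systemd is in use, `/proc/mounts` is available, and the helper name `has_mount_opt` is hypothetical.

```shell
#!/bin/sh
# Report whether the periodic TRIM timer is enabled (prints e.g. "enabled").
if command -v systemctl >/dev/null 2>&1; then
    systemctl is-enabled fstrim.timer || echo "fstrim.timer is not enabled"
fi

# has_mount_opt MOUNTPOINT OPTION [TABLE]
# Succeeds if OPTION is active on MOUNTPOINT, either as a bare flag ("ssd")
# or in name=value form ("discard=async"). TABLE defaults to /proc/mounts.
has_mount_opt() {
    awk -v mp="$1" -v opt="$2" '
        $2 == mp {
            n = split($4, o, ",")
            for (i = 1; i <= n; i++)
                if (o[i] == opt || index(o[i], opt "=") == 1) found = 1
        }
        END { exit !found }
    ' "${3:-/proc/mounts}"
}

# Check the root filesystem for the two SSD-related options.
for opt in ssd discard; do
    if has_mount_opt / "$opt"; then
        echo "$opt: active on /"
    else
        echo "$opt: not active on /"
    fi
done
```

If `ssd` already shows as active without being listed in fstab, adding it by hand would presumably change nothing.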

I understand a lot of this may be subjective and depend on the user. I’m just looking for practical options to consider that keep things lean, smooth, and fast. If something is a good idea or good practice, I’d like to enable it. If something isn’t really needed, makes no noticeable difference, or even decreases performance, then I can take it or leave it. That’s my philosophy.

At the very least I’ll probably want to add ssd and space_cache, but I’m wondering how many of these optimizations I really should or need to add. I’d appreciate any thoughtful guidance on the matter. Thanks for taking the time to read and reply; responses are always very much appreciated.